I was part of the first batch of beta users to try out DALL-E 2, then the world's most advanced image diffusion model, in spring 2022 before it was released to the public. I was a member of OpenAI's artist access program and provided direct feedback on different versions of the DALL-E model to the OpenAI team. Below is an archive of my early experiments: a prompt library, custom typeface, and image gallery.

Using DALL-E

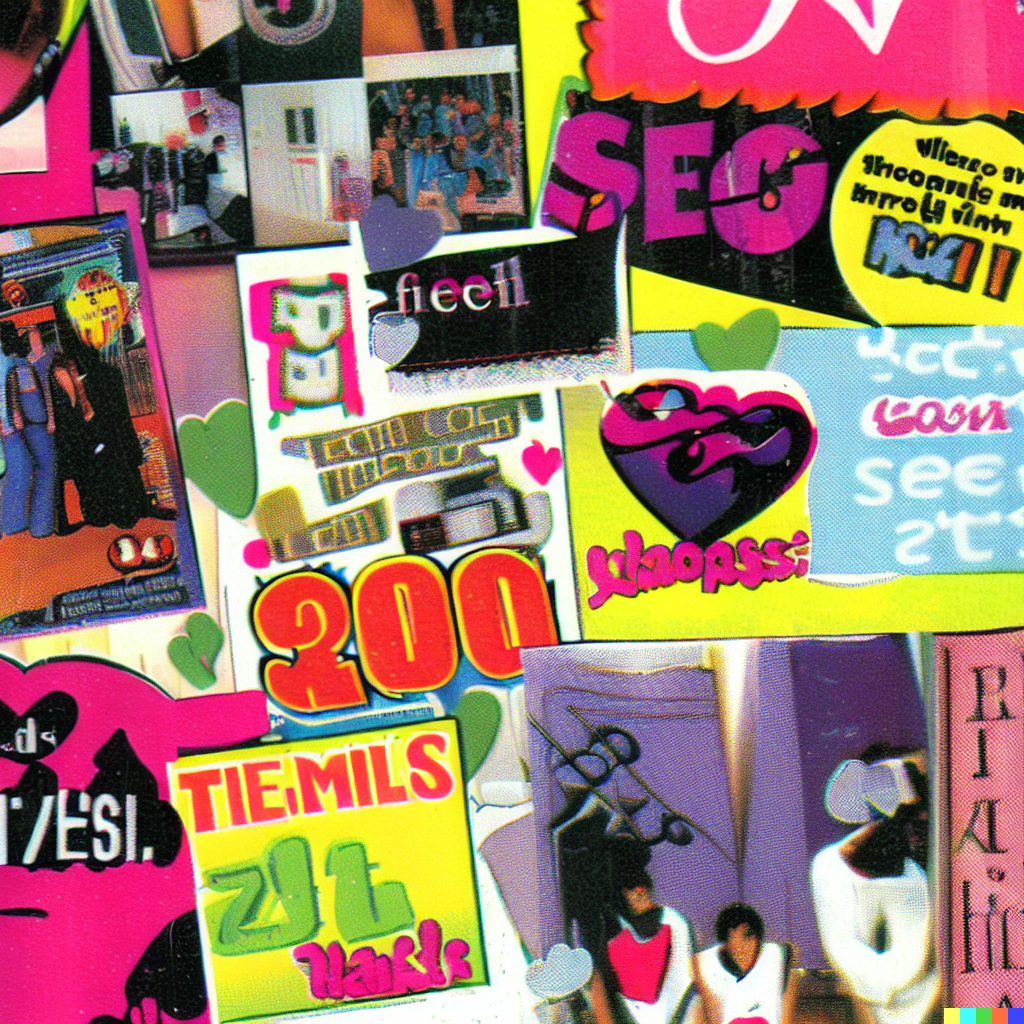

The experience of using DALL-E for the first time is pretty powerful. You enter a description, or prompt, of up to 400 characters into a text box, and a few seconds later you have 4 original compositions based on your instructions. The more detailed and specific you get, the more unique and accurate your composition. For example, you could ask for "a fishbowl", or you could ask for "a scanned drawing of a fishbowl from a punk rock zine with cut-and-paste collages and hand-drawn artwork". The only limits are your imagination, your vocabulary (more on that later), and the 650M reference images scraped from the internet that DALL-E was trained on.

It's a pretty transformative workflow, one that lets a designer worry less about technique and focus more on creative direction earlier in their process. You act like a textual photographer, describing the angle, composition, lighting, mood, and subject of your piece. The output images are imperfect: they often add extra body parts, misspell words, and generally lack consistency. But DALL-E makes for a powerful aid for generating assets, building references, and developing concepts.
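As a rough sketch of the "textual photographer" idea, the snippet below assembles a prompt from the ingredients listed above (subject, angle, composition, lighting, mood) and checks it against the 400-character limit. The `Shot` class and its field names are hypothetical helpers made up for illustration, and the example values are invented. It only builds a string and does not call DALL-E or any OpenAI API.

```python
from dataclasses import dataclass

# Character limit of the prompt box, as quoted in the text above.
MAX_PROMPT_CHARS = 400


@dataclass
class Shot:
    """Hypothetical container for the parts of a prompt (illustration only)."""

    subject: str
    angle: str = ""
    composition: str = ""
    lighting: str = ""
    mood: str = ""

    def to_prompt(self) -> str:
        # Keep only the parts that were filled in, in a fixed order.
        parts = [self.subject, self.angle, self.composition, self.lighting, self.mood]
        prompt = ", ".join(p.strip() for p in parts if p.strip())
        if len(prompt) > MAX_PROMPT_CHARS:
            raise ValueError(
                f"prompt is {len(prompt)} characters; the limit is {MAX_PROMPT_CHARS}"
            )
        return prompt


if __name__ == "__main__":
    shot = Shot(
        subject="a scanned drawing of a fishbowl from a punk rock zine",
        angle="seen from directly above",
        lighting="harsh photocopier contrast",
        mood="chaotic and playful",
    )
    print(shot.to_prompt())
```

Keeping each ingredient in its own field makes it easy to swap a single keyword, say the lighting, and compare how the generated images change.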

Prompt Library

There are now thousands of online resources dedicated to the emerging field of prompt engineering, the process of developing and optimizing instructions for generative AI models. I learned from my own experimentation that getting the basics of image generation down is simple, but figuring out the right keywords to use is more complex than it seems. Having a large vocabulary, knowledge of media production techniques, and an understanding of art history makes you a much better DALL-E user and leads to more unique and powerful results.

I began to develop my own style reference guide to document interesting keywords DALL-E users could incorporate into prompts to create unique outputs, no art background required! While many far more comprehensive guides to prompt engineering for DALL-E exist today, in spring of 2022 almost no resources were available. Looking back at this project feels like coming full circle. Today, in my day job, I work on more complex prompt engineering with LLMs, optimizing them to perform tasks related to life sciences.

Custom Typeface

DALL-E isn't too great at writing words, but it can do a decent job of generating individual letters with the right prompt. I came up with the concept of designing a font from DALL-E source images. I chose a vintage letterpress style for the project to play up the uniqueness, variations, and imperfections of each individually generated character. I generated a few dozen versions of each letter, chose the best candidate, masked and edited it in Photoshop, and then assembled the final product using FontStruct. The font is free to download via the link below :).

I love the idea of using AI tools to generate assets for designers to use in their work. Some project ideas I'd encourage others to explore: collage packs, Photoshop brush sets, dust overlays, textures, shapes, stamps, icons, wallpapers, and mockups.

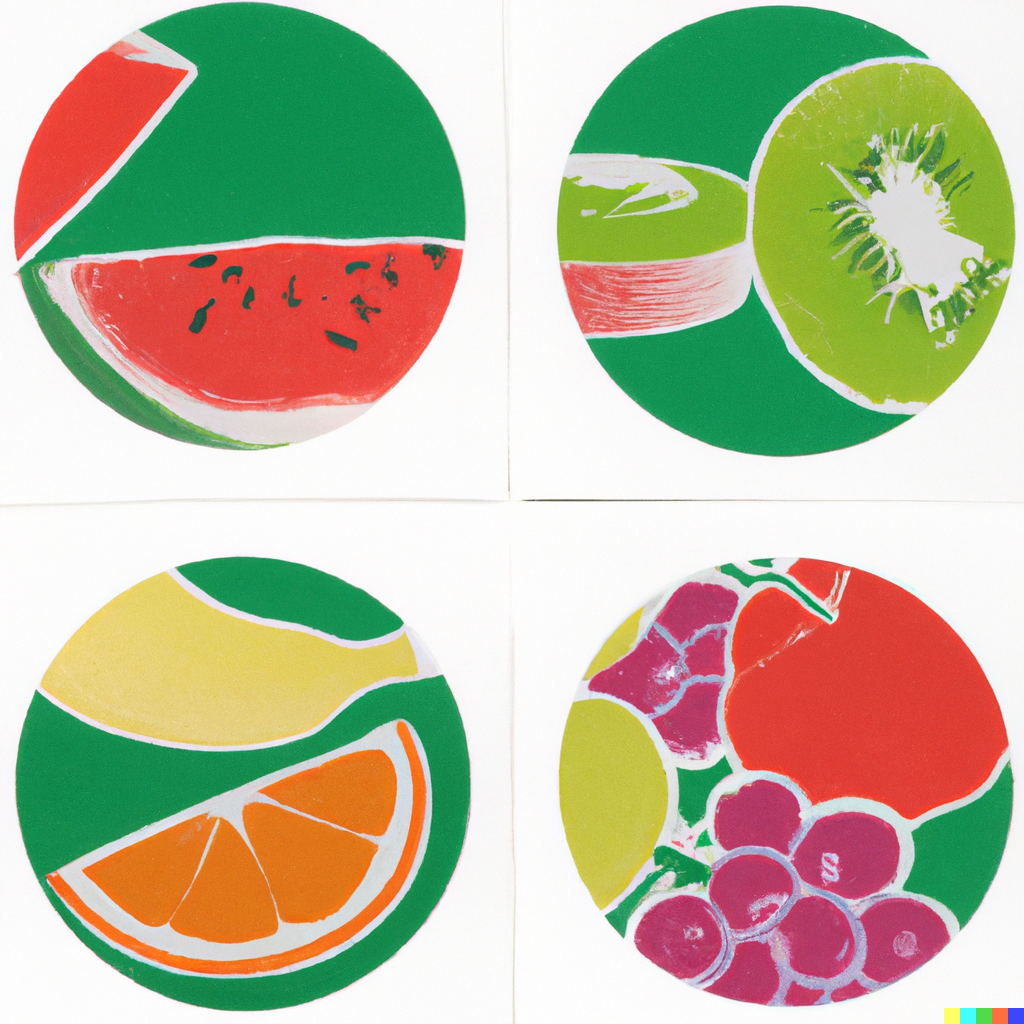

Image Gallery

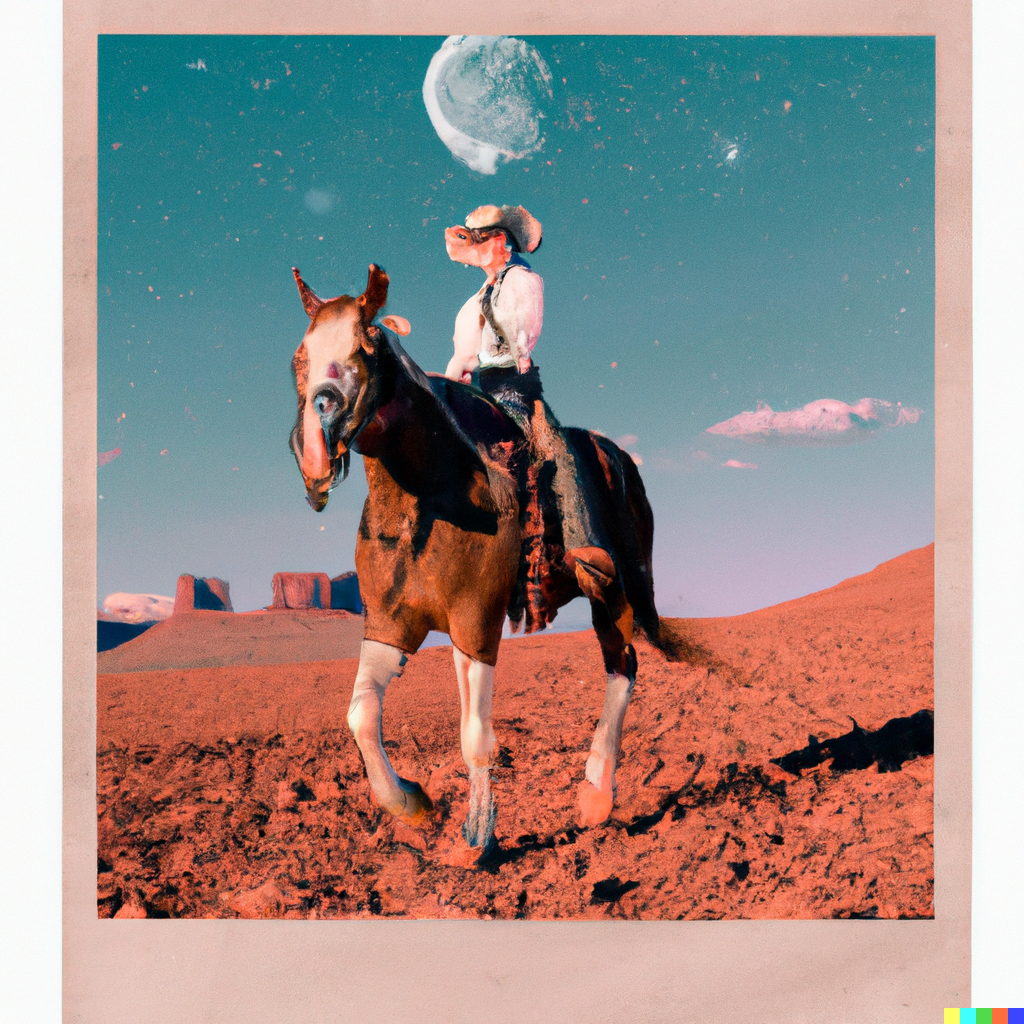

Below is a gallery of some of my favorite images I generated with DALL-E 2.

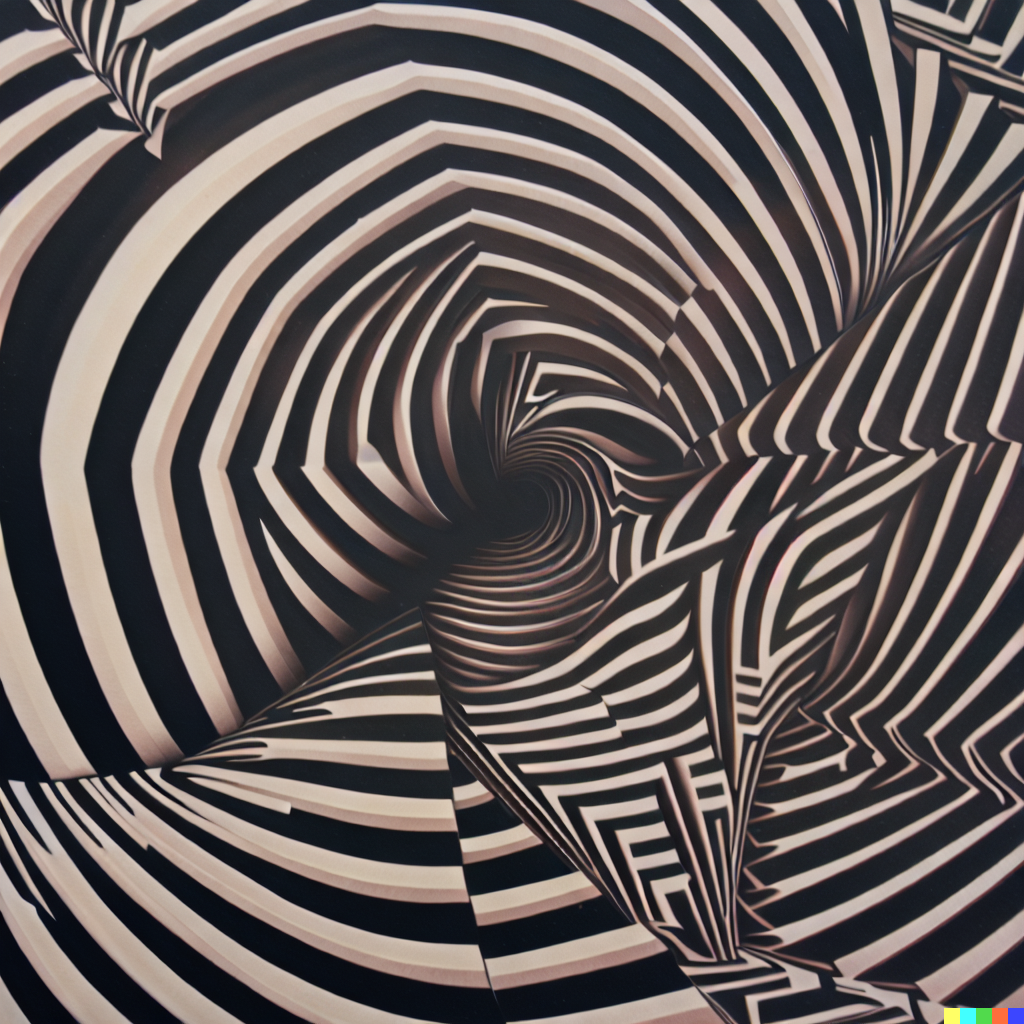

The name DALL-E is a portmanteau of the robot WALL-E and the surrealist artist Salvador Dalí. I think that name is pretty fitting because some of DALL-E's most interesting outputs come from blending two uncommon styles or concepts together. For example, "an Indian stepwell in the style of MC Escher" or "an editorial style polaroid of a cowgirl riding a horse on mars".